Bubble Bobble is a 1986 platform game developed and published by Taito for Japanese arcades (if you don’t know what an arcade is, first of all shame on you, then read this Wikipedia page).

Players control Bub and Bob, two dragons who set out to save their girlfriends from a world called the Cave of Monsters.

In each level, Bub and Bob must defeat every enemy present by trapping them in bubbles and popping them. The enemies will then turn into bonus items when they hit the ground.

There are 100 levels in total, each progressively more difficult than the last.

If that doesn’t ring a bell and you don’t see the analogy, we are in trouble!

Are we in a bubble?

The first question should be: what is the context? Innovation or speculation?

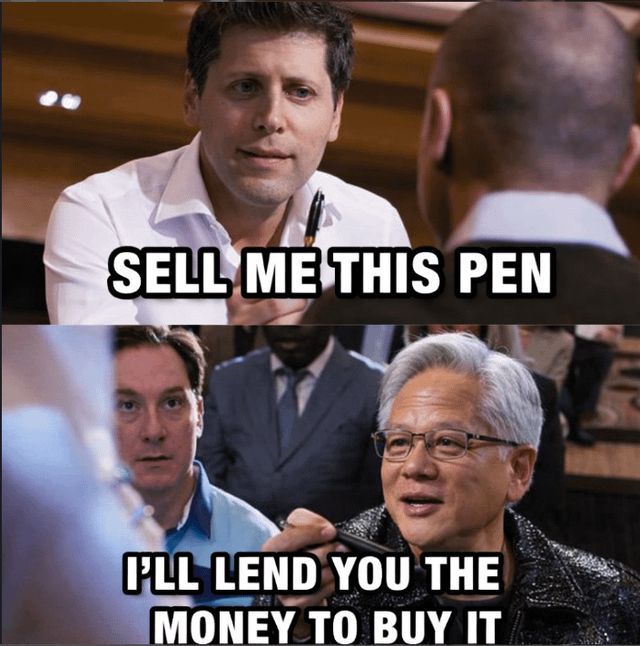

AI technology can generate speculative-growth equilibria. These are rational but fragile: elevated valuations support rapid capital accumulation, yet persist only as long as beliefs remain coordinated.

But when it comes to innovation, there are key factors that no “final user” cares about, yet that still need a lot of clarification in terms of cost and sustainability:

- Data Storage and Management

- Scalability and Flexibility

- Security and Compliance

- Cloud vs. On-Premises

- Integration with Existing Systems

- Maintenance and Monitoring

HAL 9000, the Three Laws of Robotics or people just scared of losing a job

When I was a fat 10-year-old boy (long before discovering basketball and sports), I was really into sci-fi movies and books describing dystopian futures. Being concerned about the implications of AI is nothing new for humans!

In 1942, Isaac Asimov created the Three Laws of Robotics as a foundational, fictional ethical framework designed to ensure safety, with the first law taking precedence:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given to it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

In 1968, with 2001: A Space Odyssey, Stanley Kubrick and Arthur C. Clarke introduced us to HAL 9000 (Heuristically Programmed Algorithmic Computer), a sentient artificial general intelligence that controls the systems of the Discovery One spacecraft and interacts with the ship’s astronaut crew. HAL demonstrates a capacity for speech synthesis, speech recognition, facial recognition, natural language processing, lip reading, art appreciation, interpreting emotional behaviors, automated reasoning, spacecraft piloting, and computer chess.

All qualities that most humans don’t have nowadays... but at the same time HAL is also listed as the 13th-greatest film villain in AFI’s 100 Years...100 Heroes & Villains… so…

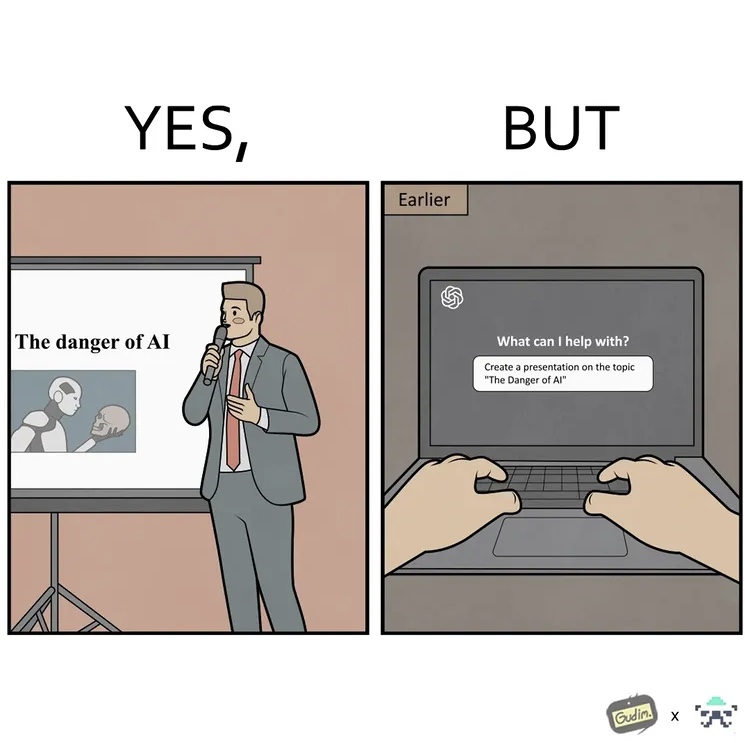

In 2026, we want AI to write business procedures for us that we can blindly trust, and we want Harry Potter’s Elder Wand (also nicknamed “The Deathstick”, for a reason…) to create our projects and presentations in minutes, but then we complain that AI is stealing our jobs. Isn’t that foolish?

If I had to summarize all these contradictions in just one sentence, I would use a quote from Jerry Seinfeld, who stated in a very recent interview:

We’re smart enough to invent AI, yet dumb enough to need it and so stupid we can’t even tell if we did the right thing

PS: hey, if you don’t know who Jerry is, go to Netflix NOW and watch all 9 seasons of “Seinfeld”, the 1989-1998 sitcom that won 10 Emmy Awards and 3 Golden Globes.

---

Stay data-hungry. Stay data-foolish.

Your Friendly Neighborhood Digital Consultant